Portfolio Website Patrick Noten

New Generation DAF

Whilst working at PTTRNS.ai, I got the opportunity to work on a project for DAF in which you can place a truck in Augmented Reality or scan one of the real DAF trucks to show content (pictures, text, videos, URLs or PDF files).

In addition, the AR trucks have animations for several of the truck’s features (steering wheel, lights, doors, mattress etc.).

One of the challenges of the project was being able to scan several trucks and show AR content on top of them. We decided to use Wikitude, which lets us create a scan of each truck (a generated point map).

With the scan inside the app, we can recognize the truck and show content at its location. With Wikitude alone, however, the placement of the content is lost as soon as you are no longer looking at the truck. To solve this, we also used AR Foundation (Unity’s framework for both ARCore on Android and ARKit on iOS) to remember the location of the scanned truck.
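The idea of remembering the truck's location can be sketched roughly as follows. This is a simplified illustration in Python, not the project's Unity code: `Pose`, `TruckPoseMemory`, `on_truck_recognized` and `content_position` are hypothetical names, and the pose is reduced to a position plus a yaw angle (with an assumed sign convention) to keep the math short.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    position: Vec3   # world-space position of the truck
    yaw_deg: float   # rotation around the vertical (y) axis, in degrees


class TruckPoseMemory:
    """Keeps the last recognized truck pose in world space (hypothetical helper)."""

    def __init__(self) -> None:
        self.last_pose: Optional[Pose] = None

    def on_truck_recognized(self, pose: Pose) -> None:
        # Called whenever the scanner reports the truck; the pose is stored
        # so it stays available after the truck leaves the camera view.
        self.last_pose = pose

    def content_position(self, local_offset: Vec3) -> Optional[Vec3]:
        # Convert an offset relative to the truck into a world position.
        if self.last_pose is None:
            return None  # the truck has not been recognized yet
        yaw = math.radians(self.last_pose.yaw_deg)
        x, y, z = local_offset
        world_x = x * math.cos(yaw) + z * math.sin(yaw)
        world_z = -x * math.sin(yaw) + z * math.cos(yaw)
        px, py, pz = self.last_pose.position
        return (px + world_x, py + y, pz + world_z)
```

Because the pose lives in the world tracking space rather than in the scanner, content positions can still be computed while the truck is out of view.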

Regular AR placement is handled by AR Foundation. The exterior of the truck can be placed anywhere, and you can scale it and move it around. The interior of the truck is centered on you behind the steering wheel and cannot be scaled or moved. The content shown on both the AR trucks and the scanned trucks is divided into separate categories, each of which can be enabled or disabled individually.
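The category toggling can be illustrated with a small sketch. It is written in Python for brevity and is not the project's code: `ContentItem`, `CategoryFilter` and the category names are hypothetical, and treating an untouched category as enabled is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContentItem:
    title: str
    category: str


@dataclass
class CategoryFilter:
    # Maps a category name to its on/off state.
    enabled: Dict[str, bool] = field(default_factory=dict)

    def set_enabled(self, category: str, value: bool) -> None:
        self.enabled[category] = value

    def visible(self, items: List[ContentItem]) -> List[ContentItem]:
        # Assumption: categories that were never toggled count as enabled.
        return [item for item in items if self.enabled.get(item.category, True)]


items = [
    ContentItem("Digital mirrors", "Safety"),
    ContentItem("Bed and storage", "Comfort"),
]
category_filter = CategoryFilter()
category_filter.set_enabled("Safety", False)
visible_titles = [item.title for item in category_filter.visible(items)]
```

After disabling the hypothetical "Safety" category, only the "Comfort" item remains visible.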

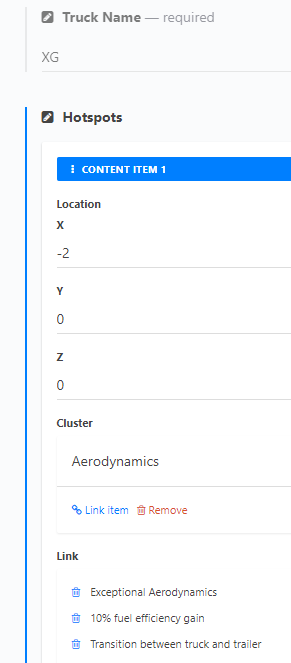

To be able to adjust the content, the categories and all the information for each truck, we used a CMS (Content Management System) called Cockpit. All the orbs shown, including their offset relative to the truck, can be adjusted via the CMS.
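As an illustration of how an orb definition with an offset might be represented, here is a minimal Python sketch. The JSON field names (`title`, `category`, `offset`) and the `Orb` and `parse_orbs` names are assumptions made for this example; they do not reflect the actual Cockpit collection schema used in the project.

```python
import json
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Orb:
    title: str
    category: str
    offset: Tuple[float, float, float]  # position relative to the truck


def parse_orbs(payload: str) -> List[Orb]:
    """Parse a JSON array of orb entries (hypothetical schema) into Orb objects."""
    orbs = []
    for entry in json.loads(payload):
        off = entry["offset"]
        orbs.append(
            Orb(
                title=entry["title"],
                category=entry["category"],
                offset=(float(off["x"]), float(off["y"]), float(off["z"])),
            )
        )
    return orbs


# Hypothetical example payload, shaped the way a CMS response could look.
SAMPLE_PAYLOAD = """
[
  {"title": "Headlights", "category": "Exterior",
   "offset": {"x": 1.1, "y": 0.9, "z": 2.4}}
]
"""

orbs = parse_orbs(SAMPLE_PAYLOAD)
```

Keeping the offset in the content data means an editor can reposition an orb by changing the CMS entry, without a new app build.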

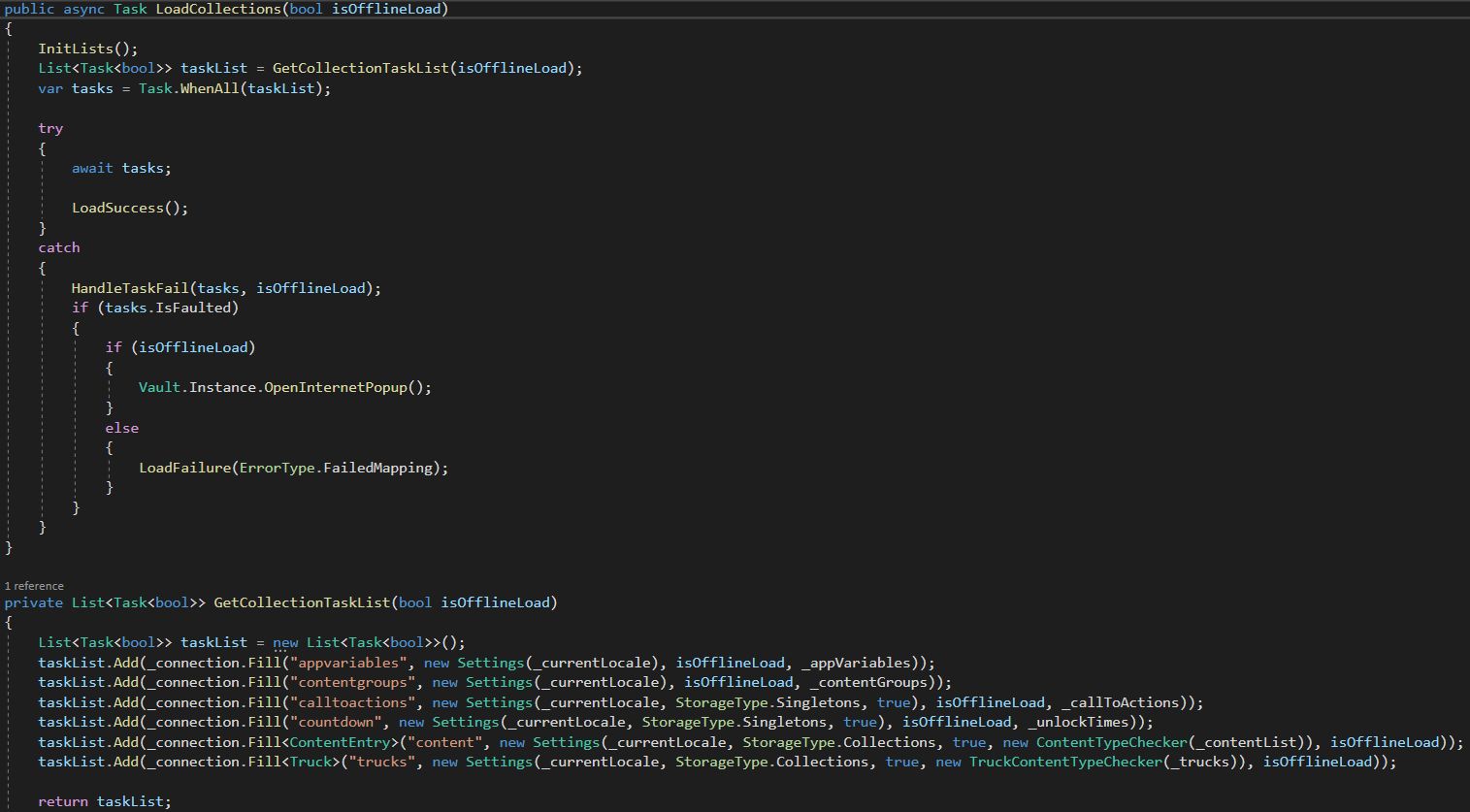

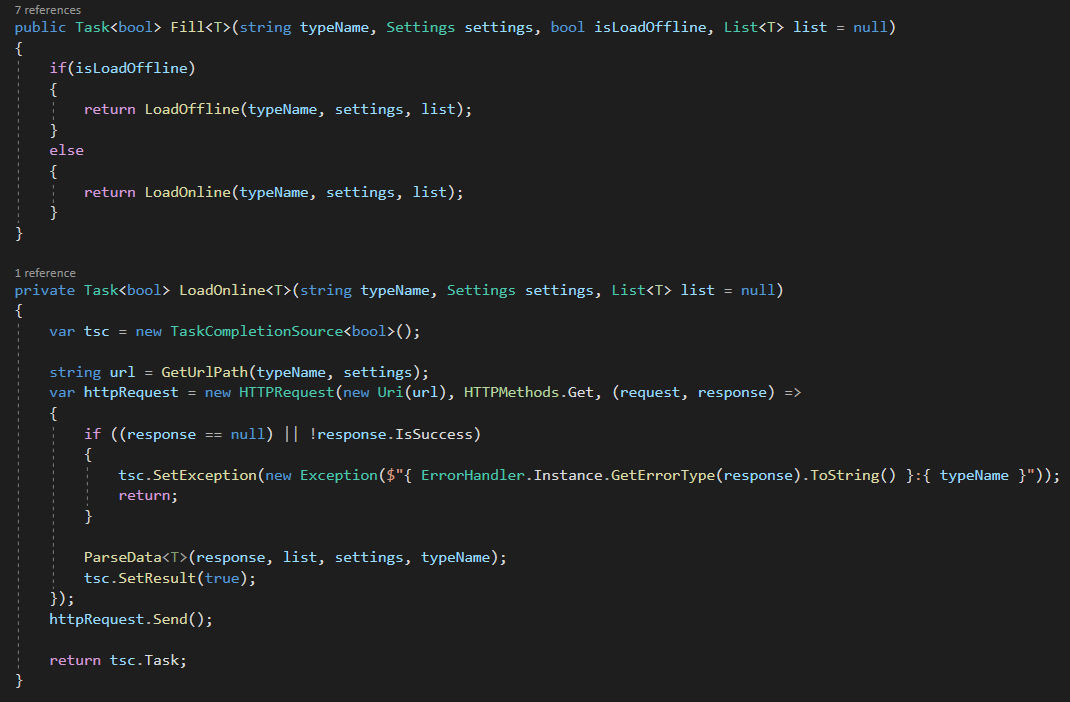

Here is a small code example showing how we load all of our content from the CMS. It handles the HTTP requests and supports offline loading after the initial load. The JSON that is received is parsed into objects and added to a list. We used tasks here so that we can wait for the results of all the requests and only succeed when every individual request succeeds.